Tentative answer: Only at the edges, and only minimally.

By "the edges", we mean that AI can be deployed generatively and usefully for menial tasks that enhance and elevate human capacities.

Specific examples

- Image classification — for example, a webcam inside a community fridge that watches for goods being placed & removed, and updates shopping lists and/or resource accounting ledgers.

- Voice transcription — for example, enabling a resource-efficient dictation pipeline that allows voice notes taken on a mobile device to be automatically synchronised across a local network and then transcribed into text, with speaker diarisation where more than one voice is present.

- Voice-activated commands — for example, an ability to trigger actions on a laptop computer by asking the computer to perform specific, pre-defined tasks such as playing music or opening websites.

- Speech synthesis — such as giving driving directions or reading ebooks aloud.
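To make the voice-activated commands example concrete, here is a minimal sketch in Python. It assumes that a local speech-to-text model has already turned the spoken request into a transcript string. The command phrases, the `COMMANDS` table and the `play_music` / `open_website` helpers are hypothetical placeholders for illustration, not part of any real library.

```python
import re
from typing import Callable, Optional


def play_music() -> str:
    # Hypothetical placeholder: a real version might start a local media player.
    return "playing music"


def open_website() -> str:
    # Hypothetical placeholder: a real version might open a browser tab.
    return "opening website"


# The full set of things the computer is allowed to do.
# A human writes this list; the model never adds to it.
COMMANDS: dict[str, Callable[[], str]] = {
    "play music": play_music,
    "open website": open_website,
}


def normalise(transcript: str) -> str:
    """Lowercase the transcript, drop punctuation and collapse whitespace."""
    text = re.sub(r"[^a-z0-9\s]", "", transcript.lower())
    return " ".join(text.split())


def dispatch(transcript: str) -> Optional[str]:
    """Run the matching pre-defined action, or do nothing if there is none."""
    action = COMMANDS.get(normalise(transcript))
    return action() if action is not None else None
```

In this sketch the model only converts speech into text. Anything that does not exactly match a pre-defined phrase is ignored, so the decision about what the machine may do stays with the person who wrote the list.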

When deployed in these ways, AI has the effect of augmenting human power rather than replacing it. We assert that AI should have no place as a decision-making agent, except perhaps under very carefully managed and scrutinised conditions.

We keep in mind that:

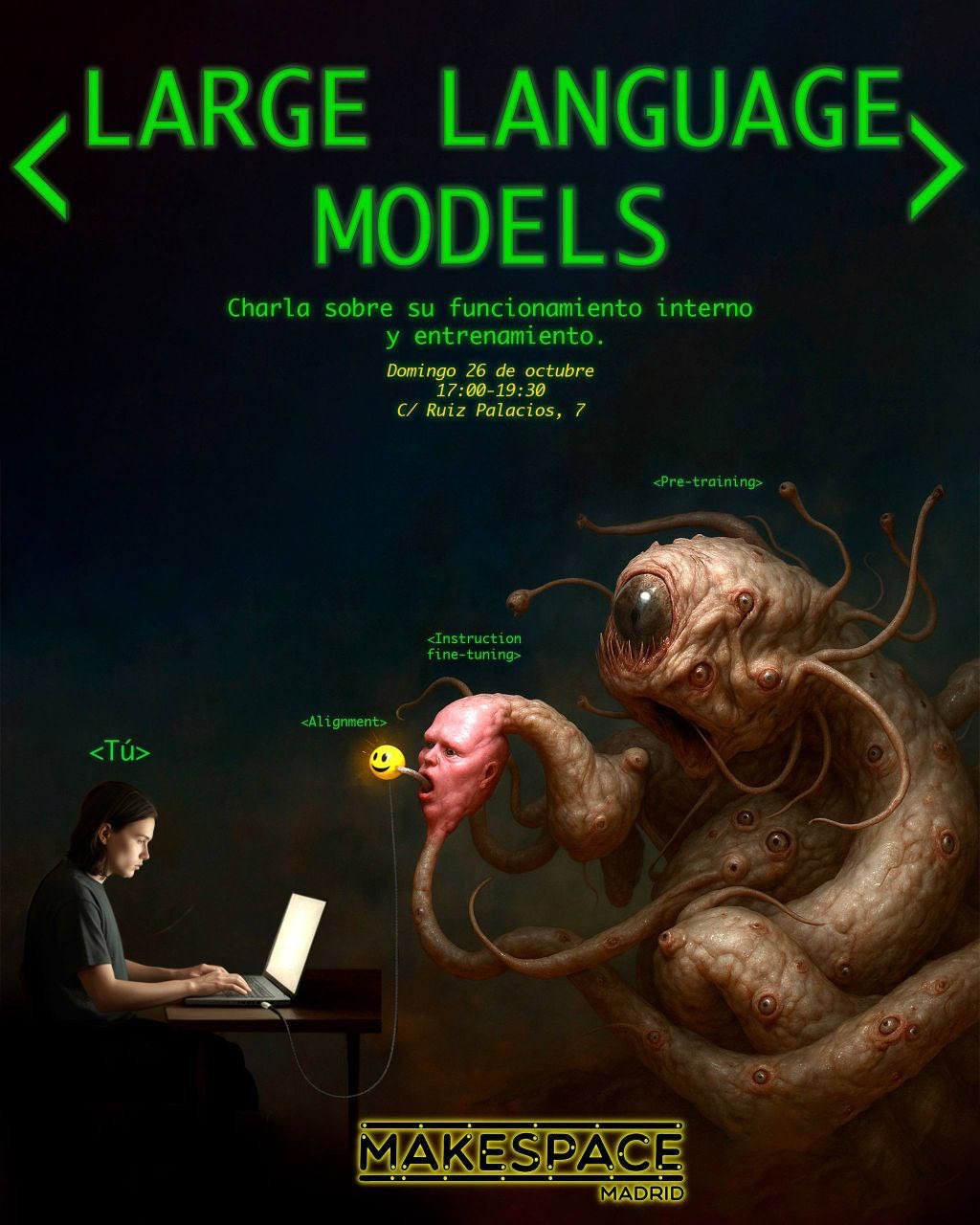

- Mainstream hosted LLM technologies such as ChatGPT and Claude are technofascist tools of the neoliberal surveillance state and the Colonial fascist-industrial military complex; and their negative social, political & environmental externalities should be resisted at all costs. Local-first solutions should be used wherever possible, in order to more ethically re-internalise previously unacknowledged harms inherent in the training of AI models.

- Use of generative image models (GANs and, more commonly now, diffusion models) mostly degrades human creative potential. Why do we crave such hyper-real, polished imagery? Is there not beauty in more spontaneous and sketchy art-forms? Where is the "art" in typing some words into a box, and having a tortured image prism spit something back out and refine it? (And by the way: in the current state of the art, it is also fairly straightforward to sense the eerie 'AI aesthetic' when confronted with it. It's not sexy; it's creepy. Please stop it.)

- Use of LLMs as thinking or brainstorming tools tends to degrade human cognitive capacity, and to suck 'playfulness', ludic wisdom & field intelligence out of the human social sphere, supplanting these deep knowings with the White Western normalisms embedded within the training data of LLMs, image generators & other neural networks.

With this last point said, it is important to be wary of ableist thinking creeping into our opinions about LLMs and AI. Though I have not yet explicitly spoken to accessibility in this series of posts, it should run to the core of all efforts in cybernetics.

In particular, voice-directed input/output and thinking augmentations can be useful for individuals living with mobility or neurological conditions. We should take care to understand their needs, and to support their self-determined embrace of AI technologies as beneficial to their agency and autonomy.

Perhaps, with more equity, AI can be deployed generatively and usefully for menial tasks that enhance and elevate human capacities.